Real-Time Loihi Interface for Neuromorphic Auditory Sensor and ED-Scorbot

In this project we aim to integrate a Neuromorphic Auditory Sensor (NAS) as an input to Loihi and to connect an event-driven robot to Loihi's output. Together, these interfaces will provide real-time communication: audio spiking information (the NAS output) can be processed in real time while a robot is commanded at the same time with negligible latency. The NAS could also be used in combination with a neuromorphic retina, such as a DVS, for real-time sensor fusion or integration applications. The Loihi output interface will be developed for the event-driven robotic arm ED-Scorbot, available in our lab. Several practical applications are possible, for example SNNs for echolocation testing, speaker identification, speech recognition, etc. The results of the SNN implemented on Loihi could be encoded as different output spike rates, which could then be used as joint references for the spiking PID controllers of the ED-Scorbot.
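The idea of using SNN output spike rates as joint references can be illustrated with a minimal rate-coding sketch. It assumes a linear mapping between a joint angle and a firing rate, evenly spaced spikes, and a receiver that estimates the rate by counting spikes in a fixed window. The constants `ANGLE_MIN`, `ANGLE_MAX`, `RATE_MIN`, `RATE_MAX` and all function names are hypothetical; this is not the ED-Scorbot or Loihi interface, and the explicit decoding back to an angle is only for illustration.

```python
# Minimal rate-coding sketch (hypothetical parameters, not the ED-Scorbot interface):
# a joint-angle reference is encoded as a firing rate, emitted as an evenly spaced
# spike train, and recovered by counting spikes in a fixed time window.

ANGLE_MIN, ANGLE_MAX = -90.0, 90.0  # hypothetical joint range (degrees)
RATE_MIN, RATE_MAX = 0.0, 1000.0    # hypothetical firing-rate range (Hz)


def angle_to_rate(angle_deg: float) -> float:
    """Linearly map a joint angle (clamped to range) to a firing rate in Hz."""
    angle = min(max(angle_deg, ANGLE_MIN), ANGLE_MAX)
    frac = (angle - ANGLE_MIN) / (ANGLE_MAX - ANGLE_MIN)
    return RATE_MIN + frac * (RATE_MAX - RATE_MIN)


def rate_to_angle(rate_hz: float) -> float:
    """Inverse of angle_to_rate: map a (clamped) firing rate back to an angle."""
    rate = min(max(rate_hz, RATE_MIN), RATE_MAX)
    frac = (rate - RATE_MIN) / (RATE_MAX - RATE_MIN)
    return ANGLE_MIN + frac * (ANGLE_MAX - ANGLE_MIN)


def regular_spike_train(rate_hz: float, duration_us: int) -> list[int]:
    """Evenly spaced spike timestamps (microseconds) in (0, duration_us]."""
    if rate_hz <= 0:
        return []
    period_us = max(1, round(1e6 / rate_hz))
    return list(range(period_us, duration_us + 1, period_us))


def rate_from_spikes(spike_times_us: list[int], t_end_us: int, window_us: int) -> float:
    """Estimate the firing rate (Hz) from spikes in the window (t_end - window, t_end]."""
    t_start = t_end_us - window_us
    count = sum(1 for t in spike_times_us if t_start < t <= t_end_us)
    return count * 1e6 / window_us


if __name__ == "__main__":
    target = 45.0
    spikes = regular_spike_train(angle_to_rate(target), duration_us=100_000)
    rate = rate_from_spikes(spikes, t_end_us=100_000, window_us=100_000)
    print(f"target={target} deg, decoded rate={rate} Hz, angle={rate_to_angle(rate)} deg")
```

Rounding the spike period to whole microseconds introduces a small quantization error at high rates, and a wider counting window lowers the rate-estimate error at the cost of added delay, which is one of the trade-offs relevant to latency characterization.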

Objectives:

- Develop a dedicated HDL communication module to feed the NAS output into Loihi.

- Study the physical-level requirements (logic levels, clock synchronization, etc.) for real-time interfacing.

- If possible, carry out a research visit to Intel to learn and to collaborate closely with their team.

- Implement a specific SDK for Loihi to support NAS communication.

- Integrate everything into OpenNAS and NAVIS to support users.

- Develop an HDL interface between Loihi and event-driven robotics.

- Implement on Loihi a simple SNN able to produce spiking signals at different frequencies to command different joint angles of the ED-Scorbot.

- Characterize the whole system in terms of latency and power consumption and compare it with the current state of the art (SOA).

- Release the interface HDL and software as open source.
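As an illustration of what the NAS-to-Loihi communication module has to handle, the following sketch packs and unpacks NAS output events as address-event (AER) words and maps each address to a flat input index for an SNN. The 16-bit layout (ear bit, channel field, polarity bit), the `NasEvent` type, and the `to_input_index` mapping are hypothetical choices made for this example, not the actual NAS or Loihi formats, and the real module would be written in HDL rather than Python.

```python
# Hypothetical 16-bit AER address layout for NAS events (illustration only):
#   bit 15     : ear (0 = left, 1 = right)
#   bits 14..1 : frequency-channel index (0..16383)
#   bit 0      : polarity (0 = positive spike, 1 = negative spike)
from dataclasses import dataclass

CHANNEL_BITS = 14
CHANNEL_MASK = (1 << CHANNEL_BITS) - 1


@dataclass(frozen=True)
class NasEvent:
    ear: int           # 0 = left, 1 = right
    channel: int       # frequency-channel index
    polarity: int      # 0 = positive, 1 = negative
    timestamp_us: int  # event time in microseconds


def pack_address(ev: NasEvent) -> int:
    """Pack ear, channel and polarity into one 16-bit AER address."""
    if ev.ear not in (0, 1) or ev.polarity not in (0, 1):
        raise ValueError("ear and polarity must be 0 or 1")
    if not 0 <= ev.channel <= CHANNEL_MASK:
        raise ValueError("channel out of range")
    return (ev.ear << 15) | (ev.channel << 1) | ev.polarity


def unpack_address(address: int, timestamp_us: int) -> NasEvent:
    """Recover the event fields from a 16-bit AER address."""
    if not 0 <= address <= 0xFFFF:
        raise ValueError("address must fit in 16 bits")
    return NasEvent(
        ear=(address >> 15) & 1,
        channel=(address >> 1) & CHANNEL_MASK,
        polarity=address & 1,
        timestamp_us=timestamp_us,
    )


def to_input_index(ev: NasEvent, n_channels: int) -> int:
    """Map an event to a flat SNN input index: one input per (ear, channel, polarity)."""
    if not 0 <= ev.channel < n_channels:
        raise ValueError("channel out of range for this sensor")
    return (ev.ear * n_channels + ev.channel) * 2 + ev.polarity


if __name__ == "__main__":
    ev = NasEvent(ear=1, channel=5, polarity=1, timestamp_us=1234)
    addr = pack_address(ev)
    print(hex(addr), unpack_address(addr, ev.timestamp_us) == ev, to_input_index(ev, 64))
```

Keeping the polarity in the least significant bit places the positive and negative spikes of the same channel at adjacent input indices; any other fixed layout works equally well as long as the sender and the receiver agree on it.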

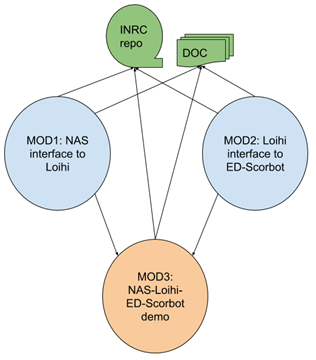

Research plan: As shown in the figure above, the project is divided into three modules. MOD1 and MOD2 will be executed in parallel to provide the input and output interfaces for Loihi. Then, MOD3 will use the results of both modules to develop a practical demonstrator. Special attention to documentation and dissemination is included in these modules.

PI: Alejandro Linares Barranco / Ángel Francisco Jiménez Fernández

Start date: 30-12-2016

End date: 29-12-2020

Researchers:

Gabriel Jiménez Moreno

Antonio Ríos Navarro

Juan Pedro Domínguez Morales

Daniel Gutiérrez Galán

Enrique Piñero Fuentes

Related posts:

- Deep Neural Networks for the Recognition and Classification of Heart Murmurs Using Neuromorphic Auditory Sensors

- Neuropod: A real-time neuromorphic spiking CPG applied to robotics

- RTC at Fundación DesQbre News

- ISCAS 2017 Live Demonstration – Multilayer Spiking Neural Network for Audio Samples Classification Using SpiNNaker

- NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps

- NAVIS: Neuromorphic Auditory VISualizer Tool

- A Binaural Neuromorphic Auditory Sensor for FPGA: A Spike Signal Processing Approach

- A spiking neural network for real-time Spanish vowel phonemes recognition

- ED-ScorBot

- Inter-spikes-intervals exponential and gamma distributions study of neuron firing rate for SVITE motor control model on FPGA

- Neuro-Inspired Spike-Based Motion: From Dynamic Vision Sensor to Robot Motor Open-Loop Control through Spike-VITE

- Fully neuromorphic system

- Stereo Matching: From the Basis to Neuromorphic Engineering

- ISCAS 2012 Demo